Building a Delivery Pipeline for .NET Web Applications with Jenkins and Psake

I’m going to review the steps needed to implement a continuous integration pipeline for a .NET web project with Jenkins. The article is intended to serve as a basis for automating .NET projects with Jenkins and Psake. It helps to align the many tools and components that are part of a complex CI process and walks through the setup from scratch. The idea behind the process is full automation of building, testing and publishing a Microsoft .NET web application. My CI components are:

- ASP.NET MVC project

- Various other .NET projects

- Jenkins as the CI server

- Windows Server 2008 R2 SP1 as the build host OS

- Psake, a PowerShell-based build automation tool

- Subversion as the SCM system

As the schema below shows, the pipeline is triggered by a commit to the Subversion repository (SCM, source code management). Jenkins then runs several linked jobs in sequence:

· The source code is compiled. This job may build several different types of .NET projects.

· Unit tests are executed, and code coverage is checked against a threshold with a coverage tool such as NCover or MSTest.

· The target application (in my case a web application) is published to the front-end server, along with a clean database set up on the back-end server.

· A set of acceptance (integration) Selenium tests is executed against the newly published application.
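The coverage check in the second step is essentially a gate: compute the percentage and fail the job when it falls below the threshold. A minimal Python sketch, assuming the covered and total line counts have already been read from the coverage report; `coverage_gate` and the 80% default are hypothetical and not part of NCover or MSTest:

```python
def coverage_gate(covered_lines: int, total_lines: int, threshold: float = 80.0) -> bool:
    """Return True when line coverage, in percent, meets the threshold."""
    if total_lines <= 0:
        # Nothing was measured: treat it as a failure instead of silently passing.
        return False
    coverage = 100.0 * covered_lines / total_lines
    return coverage >= threshold


# Hypothetical numbers; in the pipeline they would come from the coverage report.
passed = coverage_gate(covered_lines=812, total_lines=1000, threshold=80.0)
print("coverage gate passed" if passed else "coverage gate failed")
```

In a Jenkins "Execute Windows batch command" step, the script would turn a failed gate into a non-zero exit code, which is what marks the job as failed.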

If any of these pipeline jobs fails, the remaining jobs are cancelled (they never get started) and email notifications are sent to the people involved in the build process and to the authors of the included revisions. This leaves very little room for the pipeline to keep failing without anyone identifying the problem. In practice, every commit produces a testable output which, at the end of the delivery pipeline, is ready for manual testing, load testing and sign-off for release.
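The fail-fast rule can be modelled in a few lines of Python. This only illustrates the control flow (Jenkins implements it through upstream/downstream triggers); `run_pipeline` and the stage actions are hypothetical:

```python
from typing import Callable, Dict, List, Set, Tuple

# A stage is a (job name, action) pair; the action returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], revision_authors: Set[str]) -> Dict[str, object]:
    """Run the stages in order and stop at the first failing one (fail fast)."""
    executed: List[str] = []
    for name, action in stages:
        executed.append(name)
        if not action():
            # The remaining stages are never started; the authors of the
            # revisions included in this run are the ones to notify.
            return {"status": "FAILED", "failed_stage": name,
                    "executed": executed, "notify": sorted(revision_authors)}
    return {"status": "SUCCESS", "failed_stage": None,
            "executed": executed, "notify": []}


# Usage: the Commit Stage fails, so the Acceptance Stage never runs.
stages: List[Stage] = [
    ("BUILD STAGE", lambda: True),
    ("COMMIT STAGE", lambda: False),
    ("ACCEPTANCE STAGE", lambda: True),
]
result = run_pipeline(stages, {"alice"})
print(result["status"], result["executed"])
```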

Another option is to trigger the process with a scheduled poll. Compared to a hook that fires on each commit, this has a drawback: a single scheduled poll may pick up several revisions, and any of them might be the reason the pipeline fails. This makes identifying the problem harder.

automated process schema

The first part of the delivery pipeline, which builds the source code, generates output called artifacts. All subsequent jobs in a running instance of the delivery pipeline must use these artifacts. This guarantees that the pipeline is not compromised and that no “dirty” code changes sneak in between the executions of separate pipeline jobs. In the scenario I review, where unit and Selenium tests are executed, the artifacts are:

- The package generated when publishing with Visual Studio. This is essentially the bin folder plus all pages, JavaScript files, etc. Its content may vary depending on the type of web application and the libraries and frameworks used.

- The Bin\Debug|Release output of the unit test projects, along with the *.nunit project file.

- The Bin\Debug|Release output of the NUnit wrappers for the Selenium tests, along with the *.nunit project file. The assumption here is that the Selenium tests have been exported to C# code that is executed as NUnit tests.

- The SSDT (http://msdn.microsoft.com/en-us/data/tools.aspx) Bin\Debug|Release folder, if SSDT is used for creating the database. Otherwise, all related *.sql scripts (no matter how they are maintained) must be treated as artifacts.

For more details about generating and sharing artifacts, refer to the “BUILD STAGE” article.

How to build the backbone of the delivery pipeline

Start by installing the pieces that let you create empty Jenkins jobs which run successfully with Psake whenever a code change is committed to your source control system. You need to create a few Jenkins jobs, link them into a pipeline, and have each job execute an empty Psake task. After that you can put “flesh” on top of the jobs, which is the subject of the other articles.

Below is a breakdown of the steps that build the backbone of the delivery pipeline:

1. Install Jenkins

Download the Windows native package and install the Jenkins CI server. It is installed as a Windows service. Make sure the service is started, then open the Jenkins web interface at its default address, http://localhost:8080/, on the build server. By default access is anonymous, so the first things to decide are what kind of authentication you want for your CI server and what access rights your users will have.

The setup is done through the menu “Manage Jenkins” -> “Configure Global Security”.

In my case I have two users defined. One is a fully privileged “build master” user. The other one is called “scripts” and has very limited privileges: “Overall Read” and “Job Read”. That is sufficient for starting the upstream job on commit via the hook. The “scripts” user is used later in the post-commit hook to authenticate against Jenkins and trigger an instance of the delivery pipeline.

The anonymous user is effectively disabled by revoking all the access rights it has by default.

After you complete the security setup and log out, Jenkins will ask for login credentials on every subsequent login.

2. Create jobs in Jenkins

In my case the delivery pipeline consists of three related Jenkins jobs. Note that all jobs in the delivery pipeline share the same custom workspace. The custom workspace path is “C:\CI”, which overrides the default workspace location under “Program Files (x86)”.

The custom workspace can be set in the job definition under “Advanced Project Options”, via the “Advanced” button next to the section.

The Jenkins default root path definitions can be found under “Manage Jenkins” -> “Configure System” (“Advanced” button). By default they point to “Program Files (x86)”, the default install location of Jenkins.

Because most of the paths used in Jenkins plugins are relative to the job's root workspace folder, it is important that all pipeline jobs share the same custom workspace path. That way all artifacts can be placed under a common “Artifacts” folder and reused by every job in the pipeline.

Here are the pipeline jobs:

- Build Stage – the source code is checked out from the given SVN repository. The Visual Studio solutions are cleaned and rebuilt in the required order, and the artifacts are archived.

- Commit Stage – the artifacts from the upstream job (the “Build Stage”) are copied to a dedicated folder called Artifacts, under a subfolder named after the SVN revision that triggered the pipeline instance.

This means that SVN revision 19999 “produces” artifacts that are shared among the Build, Commit and Acceptance stages and are located in C:\CI\Artifacts\19999, where “C:\CI” is the custom workspace path.

After the artifacts are copied, a set of unit tests is run. If any test fails, the job instance fails too, which automatically fails the entire instance of the delivery pipeline. Email notifications are sent to the people whose changes broke the build. An NUnit report is generated and published to the job instance regardless of the test results.

After the NUnit tests run, NCover measures code coverage against a defined threshold. The coverage report is generated and published to the job instance, so it is accessible through the Jenkins GUI.

Details on the commit stage can be found in the “COMMIT STAGE” article.

- Acceptance Stage

- First, the web application is published to a dedicated front-end server via PowerShell remoting. Then the SSDT project from the same revision is built, and its output is used to publish the database that corresponds to the web application source. The database is created on a dedicated DB server, again via PowerShell remoting.

- After publishing is done, the Selenium tests are executed through NUnit. Their config files are dynamically updated to point to the location where the application is published.
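Updating the Selenium test configuration can be as simple as rewriting one appSettings entry before NUnit runs. A Python sketch, assuming the test project reads the application URL from an `<appSettings>` key; the key name `BaseUrl`, the URL and `set_app_setting` are hypothetical:

```python
import xml.etree.ElementTree as ET


def set_app_setting(config_xml: str, key: str, value: str) -> str:
    """Return config_xml with <appSettings><add key=... value=...> set to value."""
    root = ET.fromstring(config_xml)
    app_settings = root.find("appSettings")
    if app_settings is None:
        app_settings = ET.SubElement(root, "appSettings")
    for add in app_settings.findall("add"):
        if add.get("key") == key:
            add.set("value", value)
            break
    else:
        # Key not present yet: append it so the tests still find the setting.
        ET.SubElement(app_settings, "add", {"key": key, "value": value})
    return ET.tostring(root, encoding="unicode")


sample = """<configuration>
  <appSettings>
    <add key="BaseUrl" value="http://localhost/" />
  </appSettings>
</configuration>"""

# Point the tests at the freshly published application (hypothetical URL).
updated = set_app_setting(sample, "BaseUrl", "http://frontend-server/MyApp/")
```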

Jenkins job definitions are stored in the “jobs” folder under the Jenkins installation path. Each job's subfolder holds its latest stable and successful builds, its artifacts and its build history.

For most tasks you can find existing Jenkins plugins, which only need to be downloaded, installed and configured. I will review the plugins I use in later articles devoted to the individual Jenkins jobs that make up the delivery pipeline.

3. Integrate Psake and make the related jobs exchange the data they need

In my opinion, the most valuable piece of information about a pipeline instance is the revision number that triggered it. It can be used for troubleshooting, for deciding which version to roll out to production, etc. That is why, at the end of the pipeline, the artifacts and deployment packages should be placed in a folder named after the revision number that originated the build.
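A small helper keeps the revision-based layout consistent across jobs. A Python sketch, assuming the C:\CI\Artifacts\<revision> layout used by the Commit Stage; `artifacts_dir` and `publish_artifacts` are hypothetical names:

```python
import shutil
from pathlib import Path

# Custom workspace shared by all pipeline jobs on the Windows build server.
CI_ROOT = Path(r"C:\CI")


def artifacts_dir(revision: str, ci_root: Path = CI_ROOT) -> Path:
    """Return <ci_root>/Artifacts/<revision>, the folder for one pipeline instance."""
    if not revision.isdigit():
        # An empty or non-numeric value means the revision was not passed on.
        raise ValueError(f"not a valid SVN revision: {revision!r}")
    return ci_root / "Artifacts" / revision


def publish_artifacts(build_output: Path, revision: str, ci_root: Path = CI_ROOT) -> Path:
    """Copy one build output folder into the revision's artifact folder."""
    target = artifacts_dir(revision, ci_root) / build_output.name
    # dirs_exist_ok (Python 3.8+) lets several outputs share one revision folder.
    shutil.copytree(build_output, target, dirs_exist_ok=True)
    return target
```

On the build server, revision 19999 maps to C:\CI\Artifacts\19999, the same folder every later job reads from.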

“Build Number” and “Build ID”, which identify the delivery pipeline instance and the job instances running in it, are internal information that pertains more to Jenkins as a CI server than to the delivery pipeline as a process with a meaningful, expected output. At the end of the day, “revision 1234” means more to the project team than “build 1534”.

Luckily, Jenkins exposes the revision number as an environment variable for jobs that check out from Subversion. You can see the list of environment variables available in Jenkins at http://localhost:8080/env-vars.html (the default address on the Jenkins server). According to this list, the revision number is accessible in Jenkins as $SVN_REVISION, and it will be used as the “shared” value between all jobs in one pipeline instance.
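Because the Build Stage sees the revision as SVN_REVISION while the downstream jobs receive it as the REVISION parameter (configured in step 5), a script that runs in every job has to look in both places. A Python sketch of that lookup; `resolve_revision` is hypothetical, and the scripts in this article read REVISION directly:

```python
import os
from typing import Mapping, Optional


def resolve_revision(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the SVN revision this pipeline instance was started for, if known."""
    # Downstream jobs get REVISION as a build parameter; the Build Stage job
    # gets SVN_REVISION from the Subversion plugin.
    for name in ("REVISION", "SVN_REVISION"):
        value = env.get(name, "").strip()
        if value:
            return value
    return None
```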

Back to the subject: how do you install Psake and make it work with Jenkins?

- Download the Psake package and unzip it into the workspace root folder C:\CI. The unzipped folder is named “psake-master”.

- The “Source” folder in the workspace root C:\CI is where Jenkins checks out and updates your source code. This is configured in the source code management settings of the build job.

- Copy the script “psake.ps1” from the “psake-master” folder and place it in C:\CI. All further modifications related to integrating Psake with Jenkins are made in this copy.

- Create one empty PowerShell file for each of the three jobs (stages) that will be linked in the delivery pipeline: buildstage.ps1, commitstage.ps1, acceptancestage.ps1. Create one empty batch file that will be the entry point from Jenkins and the bridge between Jenkins job execution and the Psake PowerShell script invocations. Name this file build.bat.

- In each of the Jenkins jobs created in step 2, add the build step “Execute Windows batch command” and enter “build.bat” (the file just created) in the command field.

- In build.bat, call psake.ps1. The connection between Jenkins and Psake is done!

```bat
@echo off
powershell -NoProfile -ExecutionPolicy Bypass -Command "& '%~dp0\psake.ps1' -framework 4.0 -parameters @{revision='%REVISION%';stage='%JOB_NAME%'};if ($psake.build_success -eq $false) { exit 1 } else { exit 0 }"
exit /B %errorlevel%
```

Here is the body of psake.ps1:

```powershell
# Helper script for those who want to run psake without importing the module.
# Example:
# .\psake.ps1 "default.ps1" "BuildHelloWord" "4.0"
# Must match parameter definitions for psake.psm1/invoke-psake
# otherwise named parameter binding fails
param(
    [Parameter(Position=0,Mandatory=0)]
    [string]$buildFile = 'buildstage.ps1',
    [Parameter(Position=1,Mandatory=0)]
    [string[]]$taskList = @(),
    [Parameter(Position=2,Mandatory=0)]
    [string]$framework = '4',
    [Parameter(Position=3,Mandatory=0)]
    [switch]$docs = $false,
    [Parameter(Position=4,Mandatory=0)]
    [System.Collections.Hashtable]$parameters = @{},
    [Parameter(Position=5, Mandatory=0)]
    [System.Collections.Hashtable]$properties = @{}
)

try
{
    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Start building project..."
    Write-Host "scripts params..."
    foreach ($h in $parameters.GetEnumerator()) {
        Write-Host "$($h.Name): $($h.Value)"
    }
    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Solution Configuration:" $parameters.Item('buildConfig')
    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Framework version: " $framework

    # Unload any psake module left from a previous run. [p]sake is a wildcard
    # on the module name, so no error is raised when it is not loaded.
    Remove-Module [p]sake
    Import-Module .\psake-master\psake.psm1

    # Defaults, overridden by the values Jenkins passes in -parameters
    $config = 'Release'
    $revision = ''
    $stage = ''
    if ($parameters.ContainsKey('buildConfig')) { $config = $parameters.Item('buildConfig') }
    if ($parameters.ContainsKey('revision')) { $revision = $parameters.Item('revision') }
    if ($parameters.ContainsKey('stage')) { $stage = $parameters.Item('stage') }

    Write-Host $revision
    Write-Host $buildFile
    Write-Host $stage

    $psake.use_exit_on_error = $true

    # Select the stage script from the Jenkins job name (%JOB_NAME%)
    switch ($stage)
    {
        'BUILD STAGE'      { $buildFile = 'buildstage.ps1' }
        'COMMIT STAGE'     { $buildFile = 'commitstage.ps1' }
        'ACCEPTANCE STAGE' { $buildFile = 'acceptancestage.ps1' }
        default
        {
            Write-Host ("'{0}' is not a valid stage. No job execution will be triggered upon this input." -f $stage)
            throw ("'{0}' is not a valid stage." -f $stage)
        }
    }

    # The stage scripts receive the build configuration and revision as psake properties
    invoke-psake $buildFile $taskList $framework $docs $parameters -properties @{config=$config; revision=$revision}
}
catch {
    Write-Host -ForegroundColor "Red" -BackgroundColor "White" "Unexpected error."
    Write-Host -ForegroundColor "Red" -BackgroundColor "White" $error[0]
}
finally {
    Remove-Module [p]sake -ErrorAction 'SilentlyContinue'
    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "psake module successfully unloaded..."
}
```

Here are additional details on the implementation:

build.bat calls psake.ps1 with the parameters

-parameters @{revision='%REVISION%';stage='%JOB_NAME%'}

Note one difference in how Jenkins environment variables are accessed in batch files: a variable referred to as $JOB_NAME in Jenkins is written %JOB_NAME% in a .bat file.

Once psake.ps1 is called, it becomes the common entry point for all Jenkins jobs. Depending on the job context ($JOB_NAME), it invokes buildstage.ps1, commitstage.ps1 or acceptancestage.ps1 and passes the SVN revision value.
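In other words, the Jenkins job names must match the switch labels in psake.ps1 (BUILD STAGE, COMMIT STAGE, ACCEPTANCE STAGE). PowerShell's switch statement compares strings case-insensitively by default, so only letter case may differ. The same dispatch as a Python sketch; `STAGE_SCRIPTS` and `resolve_build_file` are hypothetical names:

```python
# Jenkins job name -> psake script, as in the switch statement of psake.ps1.
STAGE_SCRIPTS = {
    "build stage": "buildstage.ps1",
    "commit stage": "commitstage.ps1",
    "acceptance stage": "acceptancestage.ps1",
}


def resolve_build_file(job_name: str) -> str:
    """Return the psake script for a Jenkins JOB_NAME or raise ValueError.

    Keys are lower-cased because PowerShell's switch compares strings
    case-insensitively unless -CaseSensitive is given.
    """
    build_file = STAGE_SCRIPTS.get(job_name.lower())
    if build_file is None:
        raise ValueError(f"'{job_name}' is not a valid stage.")
    return build_file
```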

buildstage.ps1:

```powershell
Framework "4.0"
# Framework "4.0x64"

properties {
    $code_directory = Resolve-Path .\Source\Src
    $tools_directory = Resolve-Path .\Tools
    $config = "Release"
    $revision = ""
}

task default -depends Rebuild
task Rebuild -depends Clean,Build

task Clean {
    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Clean task..."
}

task Build {
    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Build task..."
}
```

commitstage.ps1:

```powershell
Framework "4.0"
# Framework "4.0x64"

properties {
    $code_directory = Resolve-Path .\Source\Src
    $tools_directory = Resolve-Path .\Tools
    $config = "Release"
    $revision = ""
}

task default -depends UnitTests
task UnitTests -depends CommitStage

task CommitStage {
    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Start CommitStage task..."
    if ($revision)
    {
        # Placeholder: the artifacts for $revision are extracted here (see "COMMIT STAGE")
    }
    else
    {
        Write-Host "Revision parameter for the Commit Stage job is empty. No artifacts will be extracted. Job will be terminated..."
        throw "Commit Stage job is terminated because no valid value for revision parameter has been passed."
    }
    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Complete CommitStage task..."
}
```

acceptancestage.ps1:

```powershell
Framework "4.0"
# Framework "4.0x64"

properties {
    $code_directory = Resolve-Path .\Source\Src
    $tools_directory = Resolve-Path .\Tools
    $config = "Release"
    $revision = ""
}

task default -depends RunAcceptance
task RunAcceptance -depends DeployApplication, RunSeleniumNunitTests

task DeployApplication {
    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Start DeployApplication task..."
    if ($revision)
    {
        Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Start deploying application..."
    }
    else
    {
        Write-Host "Revision parameter for the Acceptance Stage job is empty. No artifacts will be extracted. Job will be terminated..."
        throw "Acceptance Stage job is terminated because no valid value for revision parameter has been passed."
    }
    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Complete DeployApplication task..."
}

task RunSeleniumNunitTests {
    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Start Selenium Tests task..."
}
```

All stage scripts define the same properties: the source code folder, the build configuration (Debug|Release), the revision number and the tools root folder.

Here is some information on integrating psake with Jenkins that was very helpful to me:

https://github.com/JamesKovacs/psake/wiki/How-can-I-integrate-psake-with-Hudson%3F

4. Develop a commit hook that triggers the jobs in the delivery pipeline and set up access permissions

To develop the post-commit hook I followed the explanations in the Subversion plugin documentation:

https://wiki.jenkins-ci.org/display/JENKINS/Subversion+Plugin

The post-commit hook is a batch file that calls a VBScript:

```bat
SET REPOS=%1
SET REV=%2
SET CSCRIPT=%windir%\system32\cscript.exe
SET VBSCRIPT=C:\svnhooks\post-commit-hook-jenkins.vbs
SET SVNLOOK=C:\Program Files (x86)\VisualSVN Server\bin
SET JENKINS=http://jenkinsIP:8080/
"%CSCRIPT%" "%VBSCRIPT%" %1 %2 "%SVNLOOK%" %JENKINS%
```

And here is post-commit-hook-jenkins.vbs:

```vbscript
' Arguments passed by the post-commit batch file
repos = WScript.Arguments.Item(0)
rev = WScript.Arguments.Item(1)
svnlook = WScript.Arguments.Item(2)
jenkins = WScript.Arguments.Item(3)

Wscript.Echo "repos=" & repos
Wscript.Echo "rev=" & rev
Wscript.Echo "svnlook=" & svnlook
Wscript.Echo "jenkins=" & jenkins

' Hard-coded paths to svnlook.exe and the repository (they override the arguments above)
svnlook = """C:\Program Files (x86)\VisualSVN Server\bin\svnlook.exe"""
repos = """C:\Visual SVN\Repositories\Project"""

Set shell = WScript.CreateObject("WScript.Shell")

' Repository UUID, used in the notifyCommit URL
Wscript.Echo svnlook & " uuid " & repos
Set uuidExec = shell.Exec(svnlook & " uuid " & repos)
Do Until uuidExec.StdOut.AtEndOfStream
    uuid = uuidExec.StdOut.ReadLine()
Loop
Wscript.Echo "uuid=" & uuid

' Paths changed in this revision; sent as the body of the notifyCommit request
Set changedExec = shell.Exec(svnlook & " changed --revision " & rev & " " & repos)
Do Until changedExec.StdOut.AtEndOfStream
    changed = changed + changedExec.StdOut.ReadLine() + Chr(10)
Loop
Wscript.Echo "changed=" & changed

Set authorExec = shell.Exec(svnlook & " author --revision " & rev & " " & repos)
author = authorExec.StdOut.ReadLine()
Wscript.Echo "author=" & author

' Only trigger Jenkins for commits under the monitored branch
Set dirsChangedExec = shell.Exec(svnlook & " dirs-changed --revision " & rev & " " & repos)
path = dirsChangedExec.StdOut.ReadLine()
Wscript.Echo "path=" & path
nPos = InStr(1, path, "branches/branchName/SourceCode/", 1)

if nPos = 1 then
    Wscript.Echo "triggering build"

    ' 1) Ask Jenkins for a CSRF crumb (returned only when CSRF protection is enabled)
    url = jenkins + "crumbIssuer/api/xml?xpath=concat(//crumbRequestField,"":"",//crumb)"
    Wscript.Echo "url=" & url
    Set http = CreateObject("Microsoft.XMLHTTP")
    http.open "GET", url, False
    http.setRequestHeader "Content-Type", "text/plain;charset=UTF-8"
    ' Base64 of username:password for the "scripts" Jenkins user, e.g. test:test
    http.setRequestHeader "Authorization", "Basic dGVzdDp0ZXN0"
    http.send
    crumb = null
    if http.status = 200 then
        crumb = split(http.responseText,":")
        Wscript.Echo "crumb=" & http.responseText
    end if
    Wscript.Echo "http.status=" & http.status

    ' 2) Notify the Subversion plugin about the commit
    url = jenkins + "subversion/" + uuid + "/notifyCommit?rev=" + rev
    Wscript.Echo url
    Set http = CreateObject("Microsoft.XMLHTTP")
    http.open "POST", url, False
    http.setRequestHeader "Content-Type", "text/plain;charset=UTF-8"
    http.setRequestHeader "Authorization", "Basic dGVzdDp0ZXN0"
    if not isnull(crumb) then
        Wscript.Echo "crumb(0)=" & crumb(0)
        Wscript.Echo "crumb(1)=" & crumb(1)
        http.setRequestHeader crumb(0),crumb(1)
    end if
    ' Send the notification whether or not a crumb was issued
    http.send changed
    if http.status <> 200 then
        Wscript.Echo "Error. HTTP Status: " & http.status & ". Body: " & http.responseText
    end if
    Wscript.Echo "HTTP Status: " & http.status & ". Body: " & http.responseText
end if
```
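The two HTTP calls the hook makes (fetch a CSRF crumb, then POST the list of changed paths to notifyCommit) can be reproduced outside VBScript, for example to test the trigger by hand. A Python sketch that only builds the request; the Jenkins URL, the repository UUID, the sample crumb and the helper names are placeholders, and sending it would be a matter of `urllib.request.urlopen(req)`:

```python
import urllib.request
from typing import Optional, Tuple


def parse_crumb(response_text: str) -> Optional[Tuple[str, str]]:
    """Split the crumb issuer's 'field:value' answer into a header pair."""
    field, sep, value = response_text.strip().partition(":")
    return (field, value) if sep and field and value else None


def build_notify_request(jenkins: str, uuid: str, rev: str, changed: str,
                         auth_header: str,
                         crumb: Optional[Tuple[str, str]] = None) -> urllib.request.Request:
    """Prepare (but do not send) the POST the post-commit hook makes."""
    url = f"{jenkins}subversion/{uuid}/notifyCommit?rev={rev}"
    headers = {"Content-Type": "text/plain;charset=UTF-8",
               "Authorization": auth_header}
    if crumb is not None:
        headers[crumb[0]] = crumb[1]
    # The body is the `svnlook changed` output, as in the VBScript.
    return urllib.request.Request(url, data=changed.encode("utf-8"),
                                  headers=headers, method="POST")


# Placeholder values for illustration only.
sample_crumb = parse_crumb("Jenkins-Crumb:abc123")
req = build_notify_request("http://jenkinsIP:8080/", "REPO-UUID", "19999",
                           "U   branches/branchName/SourceCode/Web.config\n",
                           "Basic dGVzdDp0ZXN0", sample_crumb)
```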

Compared to the VBScript given in the plugin’s documentation, my change is adding authorization to the requests. I used the “scripts” Jenkins user and its password, Base64-encoded in the format

Username:password

http.setRequestHeader "Authorization", "Basic dGVzdDp0ZXN0"

You can use an online Base64 encode/decode tool such as http://www.base64decode.org/.

Another thing worth mentioning is that the “Poll SCM” option in the build stage job must be checked for the commit hook to trigger the build in Jenkins. You can enter a schedule that fires very rarely in the “Schedule” field.

At this point, if you commit to the source control repository, the build job should be triggered. The rest of the jobs won’t run yet, because they are not linked as downstream jobs of the build stage.
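The header value can also be computed locally instead of with an online tool, which keeps the credentials off third-party sites. A Python sketch using the standard `base64` module; `basic_auth_header` is a hypothetical helper and test:test is the sample credential from the hook:

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build an HTTP Basic Authorization value: 'Basic ' + Base64('username:password')."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


print(basic_auth_header("test", "test"))  # Basic dGVzdDp0ZXN0
```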

5. Link all jobs from step 2 as upstream -> downstream jobs

Install the Build Pipeline plugin and restart Jenkins. You will see that two other plugins are installed automatically:

- jQuery plugin

- Parameterized Trigger plugin

The Parameterized Trigger plugin is used to link the jobs in the delivery pipeline.

- Link “Build Stage” to “Commit Stage”

In the “Predefined parameters” section, a new parameter “REVISION” is assigned the value of the environment variable $SVN_REVISION (REVISION=$SVN_REVISION).

- Link “Commit Stage” with “Build Stage” and “Acceptance Stage”

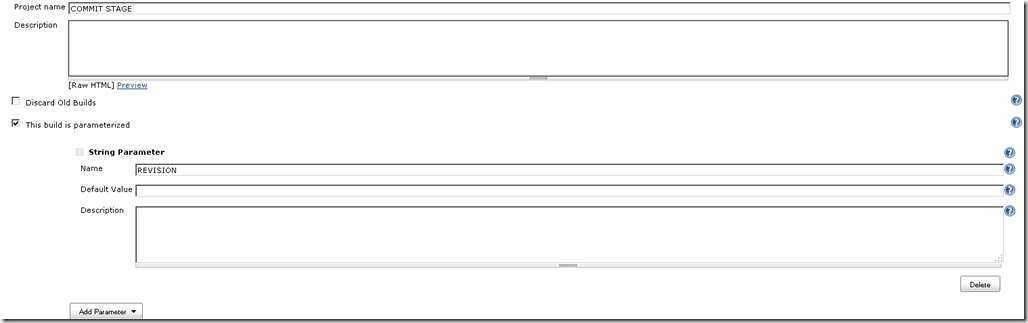

To receive the value of the SVN revision that triggered the “BUILD STAGE”, define the job as parameterized and add a string parameter named “REVISION”. The name must match the one used in the “Predefined parameters” section of “BUILD STAGE”.

You can link “ACCEPTANCE STAGE” to “COMMIT STAGE” in the same way, using the post-build action “Trigger parameterized build on other projects”.

- Link “Acceptance Stage” with “Commit Stage”

At this point the relations between the jobs are defined:

“Build stage” is upstream project to “Commit stage”.

“Commit stage” is downstream project to “Build stage”.

“Commit stage” is upstream project to “Acceptance stage”.

“Acceptance stage” is downstream project to “Commit stage”.
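These links form a simple chain, and following it from the initial job gives the sequence the pipeline view shows. A Python sketch of that walk; the `DOWNSTREAM` mapping mirrors the configuration above and `pipeline_order` is hypothetical:

```python
from typing import Dict, List, Optional

# Upstream job -> downstream job, as configured with the Parameterized Trigger plugin.
DOWNSTREAM: Dict[str, str] = {
    "BUILD STAGE": "COMMIT STAGE",
    "COMMIT STAGE": "ACCEPTANCE STAGE",
}


def pipeline_order(initial_job: str, downstream: Dict[str, str] = DOWNSTREAM) -> List[str]:
    """Follow the downstream links from the initial job, stopping on a cycle."""
    order: List[str] = []
    job: Optional[str] = initial_job
    while job is not None and job not in order:
        order.append(job)
        job = downstream.get(job)
    return order
```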

6. Create a pipeline view and make it the default for your user profile, so you can see all related jobs as a sequence

Create a new view of type “Build Pipeline View”.

Define its properties and set the initial job to “Build stage”.

Make the new view the default for your profile, so that it loads when you click “My Views”: enter the name of the newly created pipeline view in the “Default View” field of your profile's “My Views” settings.

At this point the backbone of your delivery pipeline is built. When you commit a change, the source code is updated and the empty Psake tasks are executed successfully in each of your Jenkins jobs. If you prefer, you can trigger pipeline instances on a schedule instead of through the commit hook.

Here is the list of the articles about each stage implementation:

Related links:

https://github.com/psake/psake (Psake project site)

http://jenkins-ci.org/ (Jenkins home site)

http://www.amazon.com/Continuous-Delivery-Deployment-Automation-Addison-Wesley/dp/0321601912 (Continuous Delivery by Jez Humble and David Farley)

https://github.com/JamesKovacs/psake/wiki/How-can-I-integrate-psake-with-Hudson%3F (psake and Jenkins integration)

Jenkins plugins:

https://wiki.jenkins-ci.org/display/JENKINS/Build+Pipeline+Plugin

https://wiki.jenkins-ci.org/display/JENKINS/Subversion+Plugin
