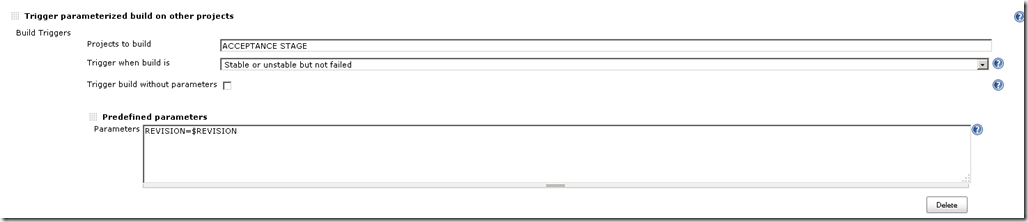

The “ACCEPTANCE STAGE” is the third job in my delivery pipeline.

Please refer to the general article on setting up the delivery pipeline with Jenkins and psake.

You can also take a look at the articles dedicated to the preceding jobs in my pipeline: the build stage and the commit stage.

The primary purpose of the acceptance stage is to run the acceptance tests, which in my case are based on Selenium. Its work can be summarized in two major activities:

- Deploying the artifacts that were produced by the build stage instance and tested by the commit stage instance.

- Executing the Selenium tests with NUnit.

The deployment task itself consists of two sub-tasks:

- Deploying the web application to a dedicated front-end server (not the build server).

- Deploying the database with SSDT to a dedicated database server (not the build server).

The “build” step is quite simple in this case: it contains only “build.cmd”, which was reviewed in the previous articles.

The functionality for deploying the web application and the database is implemented in “acceptancestage.ps1”.

If you don’t use the “msbuild” command line to deploy your web packages to a dedicated machine (accessible in your network), you have to implement the deployment with custom logic.

In my case I did it with the PowerShell WebAdministration module.

The cmdlets from this module that create web sites and applications have to be executed in the context of the machine on which the sites will reside. This machine is different from the build machine that hosts Jenkins and triggers the “ACCEPTANCE STAGE” job instance.

One possible option is to build a web service, responsible for deployment and web site creation, that the build machine can invoke. Unfortunately, this implies more development effort as well as integration and authentication concerns, which add complexity to the delivery pipeline.

The other approach (the one I chose) is to use PowerShell remoting, which allows me to call the cmdlets from the WebAdministration module in the context of the web server where I want to deploy my web package.

There is a prerequisite for this: the front-end server ($webhost in the script) must expose a shared folder into which the artifacts are copied before DeployWebProject.ps1 is executed via remoting.

The script uses the IIS WebAdministration module and loops through all web sites published on the front-end server in order to determine the port of the new site to be published.
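The port calculation could be written as a small pure function, which also makes it testable outside IIS. This is a minimal sketch, not the author's script: it assumes the new site should take the highest port currently bound plus one, and that each input string uses the IIS binding format `IP:port:hostHeader` (for example `*:8080:`). On the front-end server such strings could be collected with something like `(Get-WebBinding).bindingInformation` from the WebAdministration module. The function name `Get-NextSitePort` and the default base port 8080 are hypothetical.

```powershell
# Hypothetical helper: computes the port for a new IIS site from existing bindings.
# Each binding string is assumed to have the IIS form "IP:port:hostHeader", e.g. "*:8080:".
function Get-NextSitePort {
    param(
        [string[]]$BindingInformation = @(),
        [int]$BasePort = 8080   # assumption: returned when no valid port is found
    )

    $ports = @(foreach ($binding in $BindingInformation) {
        $parts = $binding -split ':'
        $port = 0
        # The port is the second segment; entries that do not parse are skipped.
        if ($parts.Count -ge 2 -and [int]::TryParse($parts[1], [ref]$port)) {
            $port
        }
    })

    if ($ports.Count -eq 0) { return $BasePort }

    # Next port = highest port in use + 1.
    return [int](($ports | Measure-Object -Maximum).Maximum) + 1
}

# Possible usage on the front-end server (requires the WebAdministration module):
# Import-Module WebAdministration
# $port = Get-NextSitePort -BindingInformation (Get-WebBinding).bindingInformation
```

IPv6 bindings such as `[::1]:80:` contain extra colons and are simply skipped by this parser.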

Then the New-Website and New-WebApplication cmdlets are used. After the web application is deployed, its web.config is modified so that the connection string is set up properly.

DeployWebProject.ps1 is located on the Jenkins server, but any scripts or resources it refers to must be placed on the “remote” front-end server, because Invoke-Command -FilePath sends only the script's content to the remote computer, where it runs.
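Setting the connection string after deployment could be done with a small XML edit like the sketch below. It assumes the standard `<connectionStrings><add name="…" connectionString="…"/>` layout of web.config; the function name `Set-ConnectionString` and its parameters are hypothetical and not taken from DeployWebProject.ps1. The WebAdministration module also offers `Set-WebConfigurationProperty` for this kind of change, but plain XML editing keeps the sketch testable without IIS.

```powershell
# Hypothetical helper: sets the connectionString attribute of a named entry in a web.config.
function Set-ConnectionString {
    param(
        [Parameter(Mandatory)][string]$ConfigPath,
        [Parameter(Mandatory)][string]$Name,
        [Parameter(Mandatory)][string]$ConnectionString
    )

    # Resolve to a full path: XmlDocument.Save does not know PowerShell's current location.
    $fullPath = (Resolve-Path -LiteralPath $ConfigPath).ProviderPath
    [xml]$config = Get-Content -LiteralPath $fullPath -Raw

    $node = $config.SelectSingleNode("/configuration/connectionStrings/add[@name='$Name']")
    if ($null -eq $node) {
        throw "Connection string '$Name' was not found in $fullPath"
    }

    $node.SetAttribute('connectionString', $ConnectionString)
    $config.Save($fullPath)
}
```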

```powershell
task DeployApplication {

    $webhost = "\\webhostIP"
    $webhostPassword = "webhostPassword"
    $dbhost = "\\dbhostIP"
    $dbhostPassword = "dbHostPassword"

    Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Start DeployApplication task..."

    If ($revision)
    {
        Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Start deploying application..."

        $p = Resolve-Path .\

        # Authenticate as User1, which has the required privileges.
        $password = ConvertTo-SecureString $webhostPassword -AsPlainText -Force
        $credentials = New-Object -TypeName System.Management.Automation.PSCredential -ArgumentList "webhost\User1", $password

        # Copy the artifacts to the share on the front-end server.
        Write-Host "Moving artifact packages to the front-end server hard drive..."
        $artifacts_directory = Resolve-Path .\Artifacts\$revision\Source\Packages
        NET USE "$webhost\ci\$revision" /u:webhost\User1 $webhostPassword
        robocopy $artifacts_directory "$webhost\ci\$revision" WebProject.zip
        net use "$webhost\ci\$revision" /delete
        Write-Host "Moving artifacts done..."

        # Copy the deploy scripts.
        $deployScriptsPath = Resolve-Path .\DeployScripts
        NET USE "$webhost\CI\powershell" /u:webhost\User1 $webhostPassword
        robocopy $deployScriptsPath "$webhost\CI\powershell" PublishWebSite.ps1
        net use "$webhost\CI\powershell" /delete

        $dbServer = "dbServerConnectionStringName"
        $dbServerName = "dbServerName"
        $sqlAccount = "sqlAccount"
        $sqlAccountPassword = "sqlAccountPassword"

        # Run DeployWebProject.ps1 on the web server; the revision is also used as the database name.
        Invoke-Command -ComputerName webhostIP -FilePath "$p\DeployScripts\DeployWebProject.ps1" -Credential $credentials -ArgumentList @($revision, $dbServer, $revision, $sqlAccount, $sqlAccountPassword)

        Write-Host -ForegroundColor "Green" -BackgroundColor "White" "Start deploying database..."
        #...pretty much the same
    }
    else
    {
        Write-Host "Revision parameter for the Acceptance Stage job is empty. No artifacts will be extracted. Job will be terminated..."
        throw "Acceptance Stage job is terminated because no valid value for revision parameter has been passed."
    }
}
```

The link below explains how credentials can be stored encrypted in a file rather than used as plain text in a .ps1 script:

http://blogs.technet.com/b/robcost/archive/2008/05/01/powershell-tip-storing-and-using-password-credentials.aspx

Below is the beginning of the DeployWebProject.ps1 script:

```powershell
param(
    [string]$revision = $(throw "revision is required"),
    [string]$dbServer = $(throw "db server is required"),
    [string]$dbName = $(throw "db name is required"),
    [string]$sqlAccount = $(throw "sql account is required"),
    [string]$sqlAccountPassword = $(throw "sql account password is required")
)

$p = Resolve-Path .\
Write-Host $p

Set-ExecutionPolicy RemoteSigned -Force
```

robocopy is used with impersonation (NET USE with explicit credentials) because, by default, the account in which the Jenkins jobs run has no permission on the shared folder. That account is the user that runs the Jenkins Windows service.

robocopy copies the artifacts from C:\CI\Artifacts\$revision to $webhost\ci\$revision (this folder might be created dynamically by the deploy .ps1 script).

After the web deployment is completed, the content of the shared folder is cleared.

Deploying the database uses pretty much the same approach. The artifacts needed here are:
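robocopy does not follow the usual zero-means-success convention: its exit code is a bit field in which values below 8 indicate success (1 means files were copied) and 8 or higher means at least one copy failed. The task above does not check it. A guard like the sketch below, with a hypothetical function name, could be called right after each robocopy call so that a failed copy stops the job while a successful copy with exit code 1 does not.

```powershell
# Robocopy exit codes are bit flags: values below 8 mean the copy succeeded
# (1 = files copied, 2 = extra files found, 4 = mismatches), while 8 or higher
# means at least one failure.
function Assert-RobocopySucceeded {
    param([Parameter(Mandatory)][int]$ExitCode)

    if ($ExitCode -ge 8) {
        throw "robocopy failed with exit code $ExitCode"
    }
}

# Possible usage inside the DeployApplication task:
# robocopy $artifacts_directory "$webhost\ci\$revision" WebProject.zip
# Assert-RobocopySucceeded -ExitCode $LASTEXITCODE
```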

- Dacpac file

- Database publish profile file

- Init.sql scripts that create the initial data the web application needs in order to be operational.

To generate the database publish profile, open ProjectDB.sln, right-click the SSDT project (named ProjectDB) and choose Publish.

Then click “Save Profile As…” and save the file as ProjectDB.publish.xml. The file is stored on the Jenkins file system.

Below is a sample of the file's content:

```xml
<?xml version="1.0" encoding="utf-8"?>
<Project ToolsVersion="4.0" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <PropertyGroup>
    <IncludeCompositeObjects>True</IncludeCompositeObjects>
    <TargetDatabaseName>4322</TargetDatabaseName>
    <DeployScriptFileName>4322.sql</DeployScriptFileName>
    <TargetConnectionString>Data Source=dbserver;Initial Catalog=xxx;User ID=xxx;Password=xxx;Pooling=False</TargetConnectionString>
    <ProfileVersionNumber>1</ProfileVersionNumber>
  </PropertyGroup>
</Project>
```

The artifacts are copied to the shared folder $dbhost\CI\$revision on the database server. Then PowerShell remote execution is used again, just as for the web site deployment.

The publish XML file is copied from its location on the Jenkins file system into the $dbhost\CI\$revision folder, and its content, including the connection string, is adjusted. The newly created database is named after the revision number, so it can be easily recognized when troubleshooting is required. After the publish profile is copied to the appropriate folder, it is also renamed to ProjectDB.publish.$revision.xml.
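The profile adjustment described above could be sketched as follows. This assumes the three properties from the sample profile (`TargetDatabaseName`, `DeployScriptFileName`, `TargetConnectionString`) are the ones to rewrite and that the connection string for the target server is passed in; the function name `New-RevisionPublishProfile` and its parameters are hypothetical. Because the profile is an MSBuild file, its elements live in the `http://schemas.microsoft.com/developer/msbuild/2003` namespace, so the XPath queries need an `XmlNamespaceManager`.

```powershell
# Hypothetical helper: copies a publish profile and points it at a per-revision database.
function New-RevisionPublishProfile {
    param(
        [Parameter(Mandatory)][string]$TemplatePath,      # e.g. ProjectDB.publish.xml on the Jenkins machine
        [Parameter(Mandatory)][string]$DestinationFolder, # e.g. "$dbhost\CI\$revision"
        [Parameter(Mandatory)][string]$Revision,
        [Parameter(Mandatory)][string]$ConnectionString   # assumption: target server connection string
    )

    [xml]$publishProfile = Get-Content -LiteralPath $TemplatePath -Raw

    # Publish profiles are MSBuild files, so their elements are in the MSBuild namespace.
    $ns = New-Object System.Xml.XmlNamespaceManager($publishProfile.NameTable)
    $ns.AddNamespace('msb', 'http://schemas.microsoft.com/developer/msbuild/2003')

    $values = @{
        TargetDatabaseName     = $Revision          # database is named after the revision
        DeployScriptFileName   = "$Revision.sql"
        TargetConnectionString = $ConnectionString
    }
    foreach ($key in $values.Keys) {
        $node = $publishProfile.SelectSingleNode("/msb:Project/msb:PropertyGroup/msb:$key", $ns)
        if ($null -eq $node) { throw "Element $key not found in $TemplatePath" }
        $node.InnerText = $values[$key]
    }

    # Save under the per-revision name, e.g. ProjectDB.publish.4322.xml.
    $folder = (Resolve-Path -LiteralPath $DestinationFolder).ProviderPath
    $target = Join-Path $folder ("ProjectDB.publish.{0}.xml" -f $Revision)
    $publishProfile.Save($target)
    return $target
}
```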

Below is the deployment script for the database:

```powershell
param(
    [string]$revision = $(throw "revision is required"),
    [string]$dbServer = $(throw "db server is required"),
    [string]$sqlAccount = $(throw "sql account is required"),
    [string]$sqlAccountPassword = $(throw "sql account password is required")
)

$pathToPublishProfile = "C:\CI\{0}\ProjectDB.publish.{0}.xml" -f $revision
$dacpacPath = "C:\CI\{0}\ProjectDB.dacpac" -f $revision

# Publish the dacpac with the per-revision profile, then load the initial data.
$remoteCmd = "& `"C:\Program Files (x86)\Microsoft SQL Server\110\DAC\bin\SqlPackage.exe`" /Action:Publish /Profile:`"$pathToPublishProfile`" /sf:`"$dacpacPath`""
$sqlInit = "sqlcmd -S {0} -U {1} -P {2} -d {3} -i `"C:\CI\{3}\ProvideInitData.sql`"" -f $dbServer, $sqlAccount, $sqlAccountPassword, $revision

Invoke-Expression $remoteCmd
Invoke-Expression $sqlInit

Write-Host "Creating database finished..."
```
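Building the SqlPackage.exe command as a string for Invoke-Expression requires escaped quotes around every path. An alternative, sketched below under the assumption of the same `C:\CI\<revision>` folder layout, is to build the arguments as an array and invoke the executable with the call operator `&`, which passes each element as a separate argument and quotes it when it contains spaces. `/SourceFile` is the long form of the `/sf` switch; the function name `Get-SqlPackagePublishArgs` is hypothetical.

```powershell
# Hypothetical helper: builds the SqlPackage.exe arguments used by the database script.
function Get-SqlPackagePublishArgs {
    param(
        [Parameter(Mandatory)][string]$Revision,
        [string]$Root = 'C:\CI'   # assumption: same folder layout as the script above
    )

    $folder = "$Root\$Revision"
    return @(
        '/Action:Publish'
        "/Profile:$folder\ProjectDB.publish.$Revision.xml"
        "/SourceFile:$folder\ProjectDB.dacpac"
    )
}

# Possible usage on the database server (not executed here):
# $sqlPackage = 'C:\Program Files (x86)\Microsoft SQL Server\110\DAC\bin\SqlPackage.exe'
# $publishArgs = Get-SqlPackagePublishArgs -Revision $revision
# & $sqlPackage @publishArgs
# if ($LASTEXITCODE -ne 0) { throw "SqlPackage failed with exit code $LASTEXITCODE" }
```

Checking `$LASTEXITCODE` after the call, as in the commented usage, would also let the job fail when the publish fails, which the Invoke-Expression version does not check.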

As you can see, the artifacts are referenced as local resources on the database server, even though DeployDB.ps1 is invoked from the build server.

After a successful deployment, the temporary content in $dbhost\CI\$revision and $webhost\CI\$revision is cleaned up and the folders are deleted.

At this point you should be able to browse to the newly deployed web application, log in and work with it. A dedicated database, named after the revision number, is created for each web deployment.
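The cleanup could look like the sketch below, run while the `NET USE` connection to the share is open (or on the server itself through remoting). The function name `Remove-RevisionFolder` is hypothetical. Declaring the parameters as `Mandatory` means PowerShell rejects an empty revision string, so a missing value cannot turn the call into a deletion of the whole `CI` share.

```powershell
# Hypothetical helper: deletes the per-revision folder from a share after a successful deployment.
function Remove-RevisionFolder {
    param(
        [Parameter(Mandatory)][string]$ShareRoot,  # e.g. "$webhost\CI" or "$dbhost\CI"
        [Parameter(Mandatory)][string]$Revision    # Mandatory rejects an empty string
    )

    $folder = Join-Path $ShareRoot $Revision
    if (Test-Path -LiteralPath $folder) {
        # -Recurse removes the copied packages, profiles and scripts together with the folder.
        Remove-Item -LiteralPath $folder -Recurse -Force
    }
}
```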

2. Execute Selenium tests

In the previous article I showed how unit tests can be executed and integrated in Jenkins with a .bat file.

Here is how I did it with PowerShell:

```powershell
$nunitProjFile = "$p\Artifacts\{0}\Source\Automation\WebProject.SeleniumTests\SeleniumTests.FF.nunit" -f $revision
$outputFile = "$p\Artifacts\{0}\Source\Src\Automation\WebProject.SeleniumTests\console-test.xml" -f $revision

$nunitCmd = "& `"C:\Program Files (x86)\NUnit 2.6.3\bin\nunit-console-x86.exe`" $nunitProjFile /xml:$outputFile"
Write-Host $nunitCmd
Invoke-Expression $nunitCmd

Write-Host "exit code is $LASTEXITCODE"

if ($LASTEXITCODE -ne 0)
{
    throw "One of the selenium tests failed. The acceptance stage is compromised and the job ends with error"
}
```
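A non-zero exit code only says that something failed. Because nunit-console also writes the `/xml:` result file, the job could print which tests failed before throwing. The sketch below assumes the NUnit 2.x result format, in which the root `test-results` element carries `total`, `failures` and `errors` attributes and each executed `test-case` has a `success` attribute; `Get-NUnitSummary` is a hypothetical name.

```powershell
# Hypothetical helper: summarizes an NUnit 2.x result file (the /xml: output of nunit-console).
function Get-NUnitSummary {
    param([Parameter(Mandatory)][string]$ResultPath)

    [xml]$results = Get-Content -LiteralPath $ResultPath -Raw
    $root = $results.DocumentElement   # <test-results total="..." failures="..." errors="...">

    [pscustomobject]@{
        Total       = [int]$root.GetAttribute('total')
        Failures    = [int]$root.GetAttribute('failures')
        Errors      = [int]$root.GetAttribute('errors')
        # Executed test cases that did not succeed (failures and errors).
        FailedTests = @($results.SelectNodes("//test-case[@success='False']") |
                        ForEach-Object { $_.GetAttribute('name') })
    }
}
```

For example, `(Get-NUnitSummary -ResultPath $outputFile).FailedTests | ForEach-Object { Write-Host "Failed: $_" }` could be placed just before the `throw`.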

Before the Selenium tests are executed, the related connection strings must be modified programmatically to point to the correct database and to the web address of the application deployed in the previous step.

The Selenium server must be running on the Jenkins machine for the tests to execute successfully.

If even one of the tests fails, the job is terminated and completes as failed.
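The web address of the deployed application could be written into the test project's configuration file in a similar way. The sketch below assumes the Selenium tests read it from an `<appSettings>` key in a standard .NET `.config` file; the key name `BaseUrl` and the function name `Set-AppSetting` are hypothetical. Unlike a strict update, it adds the key if it is missing.

```powershell
# Hypothetical helper: sets (or adds) an <appSettings> key in the Selenium test project's config file.
function Set-AppSetting {
    param(
        [Parameter(Mandatory)][string]$ConfigPath,
        [Parameter(Mandatory)][string]$Key,
        [Parameter(Mandatory)][string]$Value
    )

    $fullPath = (Resolve-Path -LiteralPath $ConfigPath).ProviderPath
    [xml]$config = Get-Content -LiteralPath $fullPath -Raw

    # Create <appSettings> if the file does not have one yet.
    $appSettings = $config.SelectSingleNode('/configuration/appSettings')
    if ($null -eq $appSettings) {
        $appSettings = $config.CreateElement('appSettings')
        [void]$config.DocumentElement.AppendChild($appSettings)
    }

    # Update the existing <add key="..."> entry or append a new one.
    $entry = $appSettings.SelectSingleNode("add[@key='$Key']")
    if ($null -eq $entry) {
        $entry = $config.CreateElement('add')
        $entry.SetAttribute('key', $Key)
        [void]$appSettings.AppendChild($entry)
    }
    $entry.SetAttribute('value', $Value)
    $config.Save($fullPath)
}
```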

Related links:

https://wiki.jenkins-ci.org/display/JENKINS/Copy+Artifact+Plugin

https://wiki.jenkins-ci.org/display/JENKINS/Email-ext+plugin

http://technet.microsoft.com/en-us/magazine/ff700227.aspx - how to enable PS remoting
