If you need to develop all these features from scratch, you will end up with tons of JavaScript files and code-behind methods for building the complex interface. Most likely you will also have a sizable CSS file. And since the web part is pretty much a custom control, debugging what it renders is a pain in the butt.

I had the same concerns and decided to implement my web part using jqGrid, which gives you sorting, paging and filtering pretty much out of the box. Plus, it is easily configurable in a few lines of JavaScript.

Here I am going to explain the steps and twists of my implementation.

The solution is not specific to SP2010, so you can implement it easily in SP 2007 too. It demonstrates the usage of jqGrid with pop-ups and hyperlinks in the cells. The content of the pop-up is created dynamically on the server side, depending on the clicked row.

I am using WSPBuilder for the project template, and the PoC scenario is this: based on a Customers SPList, we want to create a web part that presents the list data and adds a modal dialog showing the orders history for every customer in the list. We’d like our web part to support sorting, paging and filtering, and to have a decent look and feel.

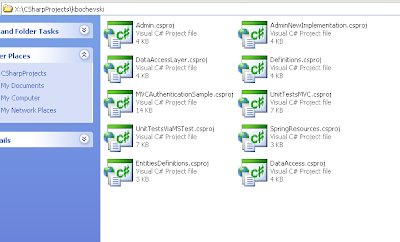

After creating the structure of the project, the solution should look pretty much like the one in the screenshot.

Following the jqGrid samples, we need to produce similar HTML output to get this working.

Check the demo of jqGrid for details at http://www.trirand.com/blog/jqgrid/jqgrid.html.

```html
...
<table id="list2"></table>
<div id="pager2"></div>
```

This can easily be achieved in the CreateChildControls method. Using HtmlTable, HtmlTableRow, HtmlTableCell and HtmlGenericControl, the needed output can be generated on the server side.

There is a little twist when it comes to the pop-ups. The pop-ups should be user controls placed in the ControlTemplates folder. Ideally, they should keep some presentation and persistence logic in their code-behind. To display them smoothly, in a manner consistent with jqGrid, we need to use the jQuery UI dialog function. Also, to display data related to a particular SPListItem, we need to pass the SPListItem.ID as a parameter to the function that takes care of the visualization.

The following JavaScript files and styles are used to render the jqGrid:

• jquery-1.4.2.min.js

• jquery-ui-1.8.5.custom.min.js

• /jqGrid/js/i18n/grid.locale-en.js

• /jqGrid/js/jquery.jqGrid.min.js

• SampleWebPart.js

• OrdersHistoryDetail.js

• jquery-ui-1.8.5.custom.css

• jqGrid/css/ui.jqgrid.css

We can inject all of our javascript functions and styles using RegisterStartupScript of ClientScriptManager.

```csharp
clientScript.RegisterStartupScript(typeof(Page), "jQueryJQWebPart_UI", JQueryGUILibrary);

public string JQueryGUILibrary
{
    get
    {
        return @"<script src='" + _jqueryMainFilePath + "' type='text/javascript'></script>" +
               @"<script src='" + _jqueryUIFilePath + "' type='text/javascript'></script>" +
               @"<script src='" + _jqueryGridLocalePath + "' type='text/javascript'></script>" +
               @"<script src='" + _jqueryGridPath + "' type='text/javascript'></script>" +
               @"<script src='" + _jqGridWebPartFilePath + "' type='text/javascript'></script>" +
               @"<script src='" + _jqGridWebPartOrdersHistoryFilePath + "' type='text/javascript'></script>" +
               @"<link href='" + _jqueryUICss + @"' rel=""stylesheet"" type=""text/css"" />" +
               @"<link href='" + _qGridCss + @"' rel=""stylesheet"" type=""text/css"" />";
    }
}
```

Since our pop-ups are ASCX controls, we need to load them and add them to the rendered content as hidden elements. Once the user opens a pop-up, we can display it and load its content from the SharePoint content database.

Now comes the tricky part. It is not enough to display the ASCX control by passing its id to the dialog() function. We also need a mechanism for generating its content dynamically on the server side, depending on which row of the grid is chosen.

Bear in mind that the control’s client id is generated on the server side at the time the control is added to the web part output. On the client side it will be something like ctl100_.... We’d like to avoid hard-coding this, so in this sample I inject the generated client ids into the rendered content.

The following code injects the function that displays the user control into the host ASPX page of the web part.

```csharp
public string PopUpJsFunctions
{
    get
    {
        return @"<script type=""text/javascript"">
//<![CDATA[
function displayUserControl(contentDivId, selectedListItemID)
{
    CallServer(selectedListItemID);
    $('#' + contentDivId).dialog();
}
//]]>
</script>";
    }
}
```

Now the question is how to get the specific content of the user control and put it into the dialog when it is displayed. This is where ICallbackEventHandler helps: its implementation provides asynchronous callbacks to the server that send only user-defined information, rather than all the data passed on a “full” postback. In our case we will pass the SPListItem.ID, which we keep in the grid’s store, and get back the specific content of the details pop-up. This content depends entirely on the SPListItem in the corresponding SPList and is generated on the server side. That is exactly what we are looking for. So, let’s make our web part implement the ICallbackEventHandler interface.

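```csharp
public class SampleJQueryWebPart : WebPart, ICallbackEventHandler
{
    // ... rest of the web part omitted ...

    // Called after RaiseCallbackEvent: returns the HTML sent back to the client.
    public string GetCallbackResult()
    {
        return DynamicOrdersHistoryForm(selectedListItemID);
    }

    // Called first: receives the argument passed from the client (the SPListItem.ID).
    public void RaiseCallbackEvent(string eventArgument)
    {
        selectedListItemID = Convert.ToInt32(eventArgument);
    }
}
```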

The dynamically generated html is very simple, and it only aims to demonstrate the PoC.

In the OnLoad method of our custom web part we can inject the client-side function together with its callback.

```csharp
// dynamic callback on clicking (...) in the Orders History column for each row in the jqGrid
String cbReference = clientScript.GetCallbackEventReference(this, "arg", "ReceiveServerData", "");
String callbackScript = "function CallServer(arg, context) {" + cbReference + "; }";
clientScript.RegisterClientScriptBlock(this.GetType(), "CallServer", callbackScript, true);
```

That’s it!
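To make the order of events easier to follow, here is a small stand-alone simulation of the round trip. It is only a sketch: the server is replaced by a stub (`fakeServer`, a hypothetical name), responses are queued and delivered by hand (`deliverResponses`, also hypothetical), and the dialog and the DOM update are reduced to log entries. In the real page, ASP.NET generates the body of `CallServer` and invokes `ReceiveServerData` when the response arrives.

```javascript
var log = [];
var pendingResponses = [];

// Stub standing in for RaiseCallbackEvent + GetCallbackResult on the server.
function fakeServer(eventArgument) {
  var selectedListItemID = parseInt(eventArgument, 10);
  return '<p>Orders history for customer ' + selectedListItemID + '</p>';
}

// In the real page this body comes from GetCallbackEventReference.
// Here the response is queued to imitate the asynchronous round trip.
function CallServer(arg, context) {
  log.push('request ' + arg);
  pendingResponses.push(function () {
    ReceiveServerData(fakeServer(String(arg)), context);
  });
}

// Client-side callback; the real one writes the result into the pop-up's div.
function ReceiveServerData(result, context) {
  log.push('content ' + result);
}

// Same shape as the injected function: start the callback, then open the dialog.
function displayUserControl(contentDivId, selectedListItemID) {
  CallServer(selectedListItemID);
  log.push('dialog ' + contentDivId);
}

// Imitates the moment the server responses arrive in the browser.
function deliverResponses() {
  while (pendingResponses.length > 0) {
    pendingResponses.shift()();
  }
}

displayUserControl('detailsDiv', 3);
deliverResponses();
console.log(log.join('\n'));
```

The log shows that the dialog is opened before the content arrives, so for a short moment the pop-up may still show whatever was rendered into it previously.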

Another tricky point is the way we feed the jqGrid with a JSON-formatted result. Since we are in ASP.NET Web Forms territory, the most convenient and reasonable way to do so is by using generic handlers.

To make the generic handler work in our case, we need to do the following:

1) Create a new ashx file in your Layouts folder (in our case, in a specific folder within Layouts).

2) Move its cs file to the Code folder of the solution.

3) Open the markup and change the WebHandler directive so that it points to the class in the deployed assembly. This:

```aspx
<%@ WebHandler Language="C#" CodeBehind="GetSampleGridContent.ashx.cs" Class="CustomWebPart.SharePointRoot.TEMPLATE.LAYOUTS.JQWebPart.GetSampleGridContent" %>
```

becomes:

```aspx
<%@ WebHandler Language="C#" Class="CustomWebPart.Code.GenericHandlers.GetSampleGridContent, CustomWebPart, Version=1.0.0.0, Culture=neutral, PublicKeyToken=59c2732ac8e0deaf" %>
```

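To get paging working properly, we need a wrapper object that presents the data in the format jqGrid expects:

```csharp
public class CustomersData
{
    public int Total { get; set; }    // total number of pages
    public int Page { get; set; }     // current page
    public int Records { get; set; }  // total number of records

    // The original line was truncated after "List"; the element type follows
    // from the text below, and the property name "Rows" is an assumption.
    public List<CustomerEntity> Rows { get; set; }
}
```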

The CustomerEntity class should have exactly the same properties as those listed in the grid’s colModel.

I won’t go into the details of the implementation. You can download the code for this article and review it.

I have one more class called CustomersGUItHelper, which provides the search and filter functionality and instantiates the CustomersData object. That object is serialized to JSON and returned to the GUI by our generic handler.

```csharp
MemoryStream stream = new MemoryStream();
DataContractJsonSerializer ser = new DataContractJsonSerializer(typeof(CustomersData));
ser.WriteObject(stream, jsonData);
stream.Position = 0;
StreamReader sr = new StreamReader(stream);
var json = sr.ReadToEnd();
context.Response.Write(json);
context.Response.End();
```
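The work done before serialization can be illustrated independently of SharePoint. The sketch below is written in JavaScript only to keep it short and runnable; it is not the C# helper from the download. It assumes the request carries jqGrid’s default parameter names (`page`, `rows`, `sidx`, `sord`), plus a hypothetical `nameFilter` parameter for the Name search, and it returns an object shaped like the wrapper class (the `Rows` property name is an assumption).

```javascript
// Sketch only: filter, sort and page an array of customers the way a
// server-side helper might, and wrap the result for the grid.
function buildGridPage(customers, request) {
  var pageSize = Math.max(1, parseInt(request.rows, 10) || 10);
  var filter = (request.nameFilter || '').toLowerCase();

  // 1. Filter by Name (case-insensitive "contains"). filter() returns a new
  //    array, so the caller's array is not modified by the sort below.
  var matches = customers.filter(function (c) {
    return filter === '' || String(c.Name).toLowerCase().indexOf(filter) !== -1;
  });

  // 2. Sort by the requested column and direction.
  var sortBy = request.sidx || 'Name';
  var direction = request.sord === 'desc' ? -1 : 1;
  matches.sort(function (a, b) {
    if (a[sortBy] < b[sortBy]) { return -direction; }
    if (a[sortBy] > b[sortBy]) { return direction; }
    return 0;
  });

  // 3. Compute the page count and clamp the requested page to a valid value.
  var totalPages = Math.ceil(matches.length / pageSize);
  var requested = parseInt(request.page, 10) || 1;
  var page = Math.min(Math.max(1, requested), Math.max(1, totalPages));
  var start = (page - 1) * pageSize;

  return {
    Total: totalPages,        // number of pages
    Page: page,               // current page (1-based)
    Records: matches.length,  // number of records after filtering
    Rows: matches.slice(start, start + pageSize)
  };
}
```

Clamping the page number matters when a filter shrinks the result set while the grid is still showing a later page.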

On the client side we have only two main JavaScript files:

• SampleWebPart.js

This file contains the definition of the jqGrid.

• OrdersHistoryDetail.js

For the PoC, this file contains only the client-side callback function. Ideally it should also contain the validation functions and the save functions (assuming they are implemented with AJAX posts).

```javascript
function ReceiveServerData(result, context) {
    var divContent = $("#" + varDetailsControlContainerId);
    divContent.html(result);
}
```
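For the first of the two files, SampleWebPart.js, a grid definition might look roughly like the sketch below. It is not the code from the download: the column names other than Name and Orders History, the handler URL, the page sizes and the `Rows` property in `jsonReader` are all assumptions. The `jsonReader` mapping is there because the wrapper’s property names are capitalized, while jqGrid’s defaults are lower-case.

```javascript
// Sketch of a jqGrid definition; names marked "assumed" are illustrative.
function buildGridOptions(handlerUrl, popupDivId) {
  return {
    url: handlerUrl,               // generic handler that returns the JSON
    datatype: 'json',
    mtype: 'GET',
    colNames: ['ID', 'Name', 'Orders History'],
    colModel: [
      { name: 'ID', index: 'ID', width: 50, key: true },   // assumed column
      { name: 'Name', index: 'Name', width: 200 },
      { name: 'OrdersHistory', width: 100, align: 'center', sortable: false,
        // Custom formatter: renders the (...) link that opens the pop-up.
        formatter: function (cellValue, options, rowObject) {
          return '<a href="#" onclick="displayUserControl(\'' + popupDivId +
                 '\', ' + rowObject.ID + '); return false;">(...)</a>';
        } }
    ],
    // Map the wrapper object's property names to what the grid reads.
    jsonReader: {
      root: 'Rows', page: 'Page', total: 'Total', records: 'Records',
      repeatitems: false, id: 'ID'
    },
    pager: '#pager2',
    rowNum: 10,
    rowList: [10, 20, 30],
    sortname: 'Name',
    sortorder: 'asc',
    viewrecords: true,
    caption: 'Customers'
  };
}

// Only runs in a page where jQuery and jqGrid are loaded.
if (typeof jQuery !== 'undefined' && jQuery.fn.jqGrid) {
  jQuery(function ($) {
    // Assumed URL; varDetailsControlContainerId is injected by the web part.
    $('#list2').jqGrid(buildGridOptions(
      '/_layouts/JQWebPart/GetSampleGridContent.ashx',
      varDetailsControlContainerId));
  });
}
```

Keeping the options in a plain function makes the formatter easy to check without a browser.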

Here are the steps for installing and uninstalling our solution:

Install steps:

1) Add-SPSolution C:\Users\kbochevski\Desktop\jqGridSPWebPart\CustomWebPart\CustomWebPart\CustomWebPart.wsp

2) Install-SPSolution -Identity 6195c1de-8e41-4537-a66d-e93b10d22f25 -GACDeployment -Local -WebApplication SPKaloyan -Force

3) Go to http://your_web_app/_layouts/newdwp.aspx, find the web part, select it and click “Populate the gallery”.

4) Create a sample page and add the web part to it, for example http://your_web_app/SitePages/jqGridSampleWebPart.aspx

Uninstall steps:

1) Uninstall-SPSolution -Identity 6195c1de-8e41-4537-a66d-e93b10d22f25 -Local -WebApplication SPKaloyan

2) Remove-SPSolution 6195c1de-8e41-4537-a66d-e93b10d22f25

After deploying the solution and creating a sample page that hosts the custom web part, it should look like this:

We can review the orders history for every customer in the web part by clicking the (…) hyperlink in the “Orders History” column.

We can filter (search) the customers by Name. This is configurable in SampleWebPart.js. I have implemented this functionality for one column only.

Our custom web part gets its data from the custom list called Customers.

The same concept can be applied to other AJAX frameworks, such as Ext JS. You can now create custom web parts based on AJAX controls, which give you a decent look and feel and powerful client-side functionality.

You can download the code related to this article here.
