If you have a .NET Web API project that uses CSOM to communicate with SharePoint 2019, and you want to document the API with Swagger (Swashbuckle), you may run into the following error:

How to reproduce the problem:

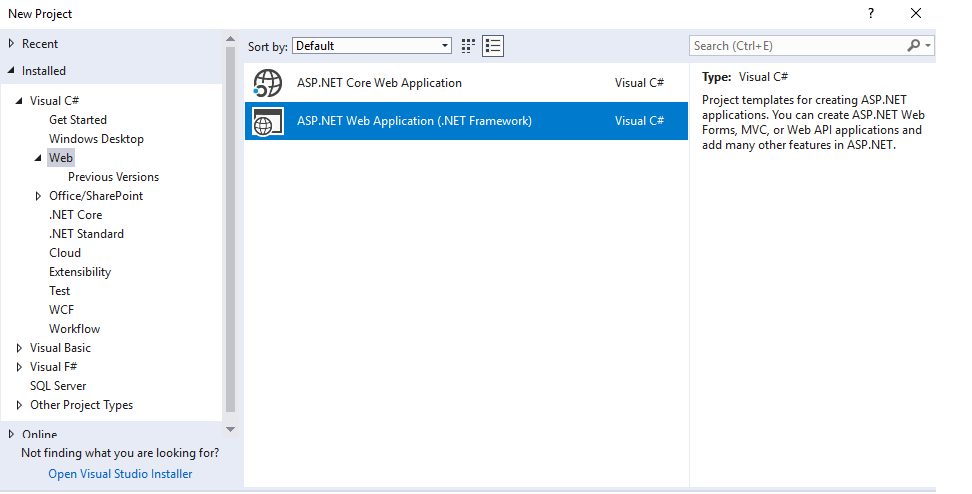

- Create a new ASP.NET Web Application. You can keep the default settings.

- Install the Swashbuckle NuGet package.

When you start your application and access the Swagger endpoint, you will see your default API controller has been documented.

- Install the Microsoft.SharePoint2019.CSOM NuGet package.

When you now try to browse the Swagger documentation, you get the following error:

500 : {"Message":"An error has occurred.","ExceptionMessage":"Not supported by Swagger 2.0: Multiple operations with path '_vti_bin/DelveApi.ashx/{version}/groups' and method 'GET'. See the config setting - \"ResolveConflictingActions\" for a potential workaround.

Swagger is trying to document the DelveApi endpoints coming from SharePoint.

You can see that the OOTB API documentation lists quite a few DelveApi endpoints.
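To confirm which operations collide, you can list what Web API's ApiExplorer has discovered. The following is a minimal sketch, not part of the original project: the class name `ApiExplorerDiagnostics` and the method `LogConflictingOperations` are hypothetical, and it assumes the DelveApi controllers are picked up by the ApiExplorer once the CSOM assemblies are referenced. It groups the discovered operations by path (without the query string) and HTTP method, which is roughly the combination the error message complains about.

```csharp
using System.Diagnostics;
using System.Linq;
using System.Web.Http;
using System.Web.Http.Description;

// Hypothetical helper, for troubleshooting only.
public static class ApiExplorerDiagnostics
{
    public static void LogConflictingOperations(HttpConfiguration config)
    {
        IApiExplorer explorer = config.Services.GetApiExplorer();

        // Operations that share both path and HTTP method cannot be
        // represented as separate operations in Swagger 2.0.
        var conflicts = explorer.ApiDescriptions
            .GroupBy(d => new
            {
                Path = d.RelativePath.Split('?')[0], // drop the query string
                Method = d.HttpMethod.Method
            })
            .Where(g => g.Count() > 1);

        foreach (var group in conflicts)
        {
            Debug.WriteLine(group.Key.Method + " " + group.Key.Path);
            foreach (var description in group)
            {
                // Show which controller and action each duplicate comes from.
                var action = description.ActionDescriptor;
                Debug.WriteLine("  " +
                    action.ControllerDescriptor.ControllerType.FullName +
                    "." + action.ActionName);
            }
        }
    }
}
```

One way to use it is to call `ApiExplorerDiagnostics.LogConflictingOperations(GlobalConfiguration.Configuration)` once at startup, after the routes have been registered, and read the result in the debugger's Output window.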

How to fix it

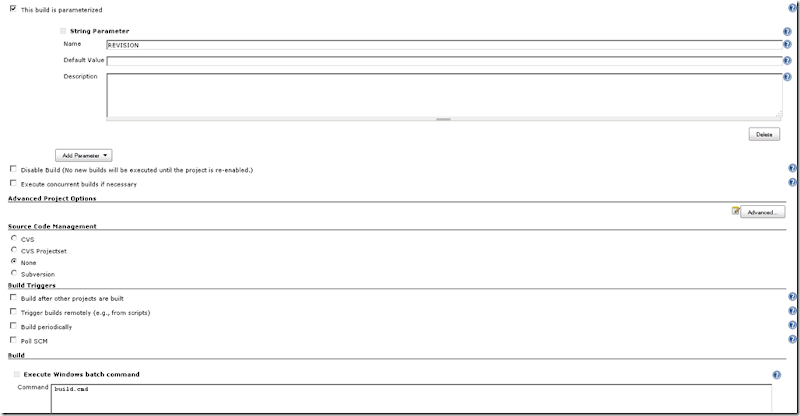

1. In SwaggerConfig.cs, make the following changes:

- add a reference to Linq

    using System.Linq;

- in the EnableSwagger action, add the following line of code

    c.ResolveConflictingActions(apiDescriptions => apiDescriptions.First());

2. Implement IDocumentFilter and modify the EnableSwagger configuration in SwaggerConfig.cs:

    GlobalConfiguration.Configuration
        .EnableSwagger(c =>
        {
            c.SingleApiVersion("v1", "WebApplication1");
            c.ResolveConflictingActions(apiDescriptions => apiDescriptions.First());
            c.DocumentFilter<SwaggerDocumentFilterSharePoint>();
        })
        .EnableSwaggerUi(c =>
        {
            c.DocumentTitle("Web Application REST");
        });

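    using System.Collections.Generic;
    using System.Web.Http.Description;
    using Swashbuckle.Swagger;

    // Removes the SharePoint DelveApi paths from the generated Swagger document.
    public class SwaggerDocumentFilterSharePoint : IDocumentFilter
    {
        public void Apply(SwaggerDocument swaggerDoc, SchemaRegistry schemaRegistry, IApiExplorer apiExplorer)
        {
            // Copy the paths first so the original collection can be cleared and rebuilt.
            var paths = new Dictionary<string, PathItem>(swaggerDoc.paths);
            swaggerDoc.paths.Clear();

            foreach (var path in paths)
            {
                // Keep everything except the DelveApi endpoints.
                if (!path.Key.Contains("_vti_bin/DelveApi.ashx") && !path.Key.Contains("/api/DelveApi"))
                    swaggerDoc.paths.Add(path);
            }
        }
    }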
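The path check used by the filter can be tried out on its own, without Swashbuckle. The sketch below is an illustration only: `DelvePathFilter`, `ShouldKeep` and `KeepOwnPaths` are hypothetical names, and `/api/Values` and `/api/DelveApi/groups` are made-up sample paths. It applies the same two `Contains` checks to a plain dictionary.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-alone version of the filter's path check.
public static class DelvePathFilter
{
    // The same two markers the document filter looks for.
    private static readonly string[] ExcludedMarkers =
    {
        "_vti_bin/DelveApi.ashx",
        "/api/DelveApi"
    };

    // string.Contains is an ordinal, case-sensitive comparison.
    public static bool ShouldKeep(string path)
    {
        return !ExcludedMarkers.Any(marker => path.Contains(marker));
    }

    // Returns a new dictionary holding only the paths that pass the check.
    public static Dictionary<string, TItem> KeepOwnPaths<TItem>(IDictionary<string, TItem> paths)
    {
        return paths
            .Where(p => ShouldKeep(p.Key))
            .ToDictionary(p => p.Key, p => p.Value);
    }
}

public static class Program
{
    public static void Main()
    {
        var paths = new Dictionary<string, string>
        {
            { "/api/Values", "own controller" },
            { "/_vti_bin/DelveApi.ashx/{version}/groups", "SharePoint" },
            { "/api/DelveApi/groups", "SharePoint (made-up sample path)" }
        };

        foreach (var kept in DelvePathFilter.KeepOwnPaths(paths).Keys)
            Console.WriteLine(kept); // prints "/api/Values"
    }
}
```

Because the comparison is case-sensitive, a path that spells the marker with different casing would not be filtered out. If that turns out to matter, `IndexOf` with `StringComparison.OrdinalIgnoreCase` is one alternative.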
